Jingpai HPC Research Institute | Configuring Intel oneAPI Environment Variables & Running a PyTorch Program

Jingpai HPC Research Institute

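We are the technical team of Jingpai Technology. We mainly share technical information on high-performance computing and help readers with the technical problems and bottlenecks they run into. You are welcome to discuss and ask questions in the comments below the article.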

- In two earlier articles (https://blog.csdn.net/weixin_44506674/article/details/110413364, https://blog.csdn.net/weixin_44506674/article/details/110990718) I briefly covered how to install Intel oneAPI and some basics of its toolkits. Starting with this article, I will explain how to use the AI toolkit in Intel oneAPI.

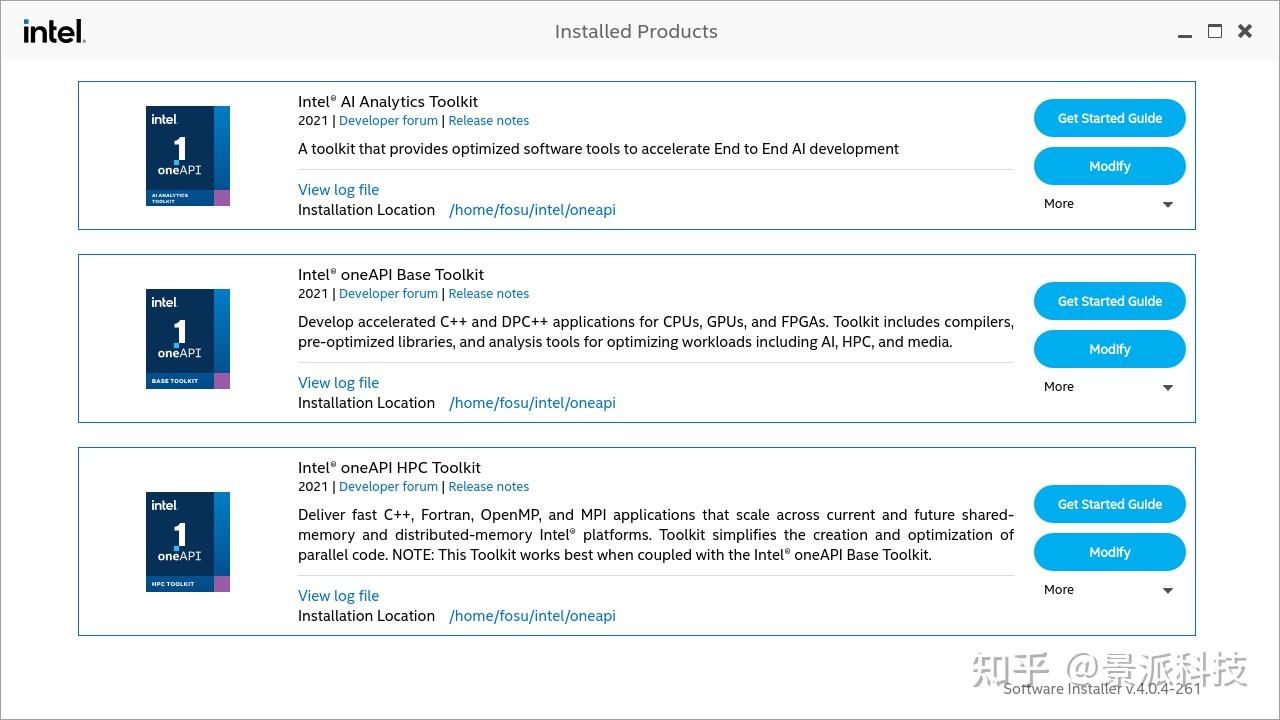

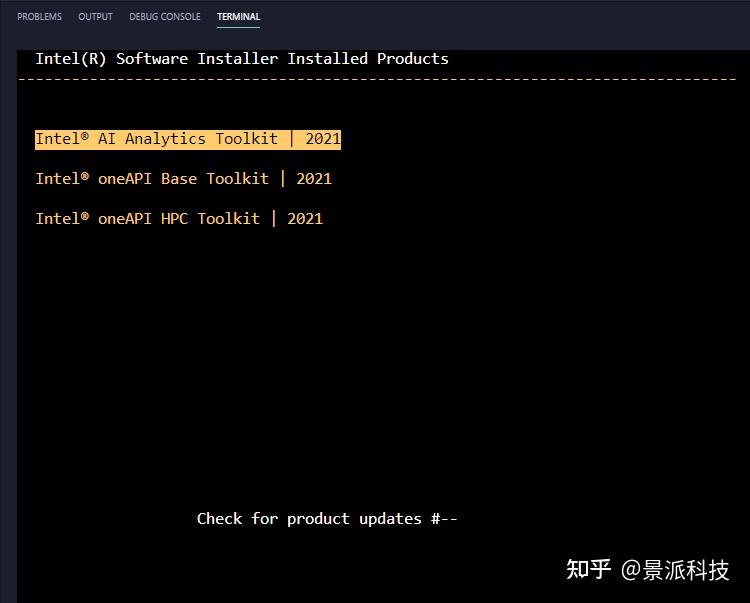

1. Checking which oneAPI toolkits are installed

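Run the installer program in the oneAPI installation directory (by default ~/intel/oneapi for a non-root install, /opt/intel/oneapi when installed as root) to see which oneAPI toolkits you have installed.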

Using my own machine as an example, run the following commands (adjust the path to match your actual installation):

```shell
cd ~/intel/oneapi/installer/
./installer
```

If your connection tool supports X11 forwarding, you will see the graphical interface shown below:

If it does not support X11 forwarding, you get the text-mode interface instead, as shown below:

Intel's tools offer both a graphical and a text interface, which is very thoughtful and deserves a thumbs-up.
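If you only want a quick list from a script rather than the installer UI, the installation directory itself can be scanned. The sketch below assumes the oneAPI 2021-style layout in which each component sits in `<root>/<component>/<version>/` next to a `latest` link (for example `mkl/latest`), and skips helper directories without `latest`, such as `installer`. Note that it lists components (mkl, tbb, compiler, ...) rather than toolkits; the function name `list_oneapi_components` is hypothetical, and the installer remains the authoritative source.

```python
import os
from pathlib import Path
from typing import List


def list_oneapi_components(root: str = "~/intel/oneapi") -> List[str]:
    """Names of sub-directories of `root` that contain a `latest` entry.

    Assumes the layout <root>/<component>/<version>/ plus a `latest` link,
    as in oneAPI 2021 installs; helper dirs such as `installer` are skipped.
    """
    base = Path(os.path.expanduser(root))
    if not base.is_dir():
        return []
    return [
        entry.name
        for entry in sorted(base.iterdir())
        # lexists() is also true for a `latest` symlink whose target is missing
        if entry.is_dir() and os.path.lexists(str(entry / "latest"))
    ]


if __name__ == "__main__":
    # Default path from the article; use /opt/intel/oneapi for a root install.
    for name in list_oneapi_components():
        print(name)
```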

2. Setting the oneAPI environment variables

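The oneAPI installation directory also contains a file named setvars.sh, which sets up the environment variables for all the oneAPI toolkits you have installed. If you only use Intel oneAPI occasionally, run `source ~/intel/oneapi/setvars.sh intel64` before each use. To make the settings permanent, source the file from /etc/profile (applies to all users) or ~/.bashrc (applies only to your user). The figure below shows the steps I followed to set the oneAPI environment variables in ~/.bashrc: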

2.1 Edit the user environment file

Append the following line to the end of ~/.bashrc:

```shell
source /home/fosu/intel/oneapi/setvars.sh intel64
```
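Appending this line by hand (or with `echo ... >> ~/.bashrc`) more than once leaves duplicate copies, and each new shell would then source setvars.sh repeatedly. Here is a minimal Python sketch that appends the line only if it is not already present. `ensure_line` is a hypothetical helper name, and the path is the one from my machine, so change it to match your install.

```python
from pathlib import Path

# The line from step 2.1; change the path to match your own installation.
SOURCE_LINE = "source /home/fosu/intel/oneapi/setvars.sh intel64"


def ensure_line(rc_path: str, line: str = SOURCE_LINE) -> bool:
    """Append `line` to `rc_path` unless the file already has it.

    Returns True if the file was changed, False if the line was already there.
    """
    path = Path(rc_path).expanduser()
    text = path.read_text() if path.exists() else ""
    if any(existing.strip() == line for existing in text.splitlines()):
        return False
    with path.open("a") as f:
        # Keep the new line separate if the file lacks a trailing newline.
        if text and not text.endswith("\n"):
            f.write("\n")
        f.write(line + "\n")
    return True
```

Calling `ensure_line("~/.bashrc")` once or several times leaves exactly one copy of the line. Afterwards, continue with step 2.2.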

2.2 Make the environment variables take effect immediately

```shell
source ~/.bashrc
```

You can see that this first source succeeded, and oneAPI is now ready to use.
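To see what sourcing setvars.sh actually changes, one option is to source it in a child bash and compare the result with a baseline taken in the same way. This sketch assumes bash and GNU `env` (for `env -0`) are available. `env_after_sourcing` and `changed_vars` are hypothetical helper names. Expect changes to variables such as `PATH` and `LD_LIBRARY_PATH`; the exact set depends on which toolkits you installed.

```python
import os
import subprocess
from typing import Dict


def env_after_sourcing(script: str, *args: str) -> Dict[str, str]:
    """Source `script` (with `args`) in a child bash and return its environment."""
    # Inside `bash -c CMD NAME ARGS...`, $0 is NAME and "$@" is ARGS.
    # The script's own output is discarded; `env -0` NUL-separates entries so
    # values that contain newlines are parsed correctly.
    cmd = 'source "$0" "$@" >/dev/null 2>&1; env -0'
    out = subprocess.run(
        ["bash", "-c", cmd, script, *args],
        check=True, stdout=subprocess.PIPE,
    ).stdout.decode(errors="replace")
    env = {}
    for entry in out.split("\0"):
        key, sep, value = entry.partition("=")
        if sep:
            env[key] = value
    return env


def changed_vars(script: str, *args: str) -> Dict[str, str]:
    """Variables that are new or different after sourcing `script`."""
    # Baseline: the same child shell, but sourcing an empty file instead.
    before = env_after_sourcing(os.devnull)
    after = env_after_sourcing(script, *args)
    return {k: v for k, v in after.items() if before.get(k) != v}


if __name__ == "__main__":
    # Path and argument from the article; adjust to your installation.
    setvars = os.path.expanduser("~/intel/oneapi/setvars.sh")
    for key, value in sorted(changed_vars(setvars, "intel64").items()):
        print(f"{key}={value[:80]}")
```

Run it from a shell in which setvars.sh has not been sourced yet. Otherwise the baseline already contains the oneAPI settings and the diff comes out nearly empty.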

A quick test shows that everything works:

3. Activating the Intel oneAPI conda environments

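You may be wondering: I have installed the oneAPI AI toolkit and set up the environment variables, so how do I use the optimized PyTorch and TensorFlow that oneAPI provides?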

Anyone who regularly develops in Python or does machine learning knows Anaconda. The optimized PyTorch and TensorFlow in oneAPI are in fact provided to us as Anaconda virtual environments.
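Because these are ordinary conda environments, a script can check which one it is running in. `conda activate` exports `CONDA_DEFAULT_ENV` (the environment name), and `sys.prefix` points at the directory of the running interpreter's environment. The "oneapi directory in the path" test is only a heuristic based on the install location used in this article (…/intel/oneapi/intelpython/latest/envs/…). Both function names are hypothetical.

```python
import os
import sys
from pathlib import PurePath
from typing import Mapping, Optional


def active_conda_env(environ: Mapping[str, str] = os.environ) -> Optional[str]:
    """Name of the environment recorded by `conda activate`, or None."""
    return environ.get("CONDA_DEFAULT_ENV")


def looks_like_oneapi_env(prefix: str = sys.prefix) -> bool:
    """Heuristic: the interpreter lives somewhere under a directory named `oneapi`."""
    return "oneapi" in PurePath(prefix).parts


if __name__ == "__main__":
    print("CONDA_DEFAULT_ENV:", active_conda_env())
    print("sys.prefix:", sys.prefix)
    print("under oneapi/:", looks_like_oneapi_env())
```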

3.1 Listing the Anaconda virtual environments provided by oneAPI

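After activating the oneAPI environment variables, run:

```shell
conda env list
```

You will see several additional virtual environments whose paths contain oneAPI, as shown below: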

So the picture is clear: to use the optimized PyTorch or TensorFlow provided by oneAPI, we only need to activate the corresponding Anaconda virtual environment.
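Picking out those environments can also be scripted. `conda env list --json` prints a JSON object whose `envs` key lists environment paths. The sketch below keeps the paths that contain "oneapi". The helper names are hypothetical, and the filter assumes the oneAPI environments live under the oneAPI install directory, as they do here.

```python
import json
import shutil
import subprocess
from typing import List


def oneapi_env_paths(env_list_json: str, marker: str = "oneapi") -> List[str]:
    """Paths from `conda env list --json` output that contain `marker`."""
    envs = json.loads(env_list_json).get("envs", [])
    return [path for path in envs if marker in path]


def conda_env_list_json() -> str:
    """Raw JSON printed by `conda env list --json` (conda must be on PATH)."""
    return subprocess.run(
        ["conda", "env", "list", "--json"],
        check=True, stdout=subprocess.PIPE, universal_newlines=True,
    ).stdout


if __name__ == "__main__" and shutil.which("conda"):
    for path in oneapi_env_paths(conda_env_list_json()):
        print(path)
```

For an environment stored under an `envs/` directory, the last path component (for example `pytorch` in `.../envs/pytorch`) is the name you pass to `conda activate`.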

3.2 Activating an Intel oneAPI Anaconda virtual environment

For demonstration, I activated the oneAPI virtual environment named pytorch and listed what it contains.

```shell
conda activate pytorch
conda list
```

The output is as follows:

```
# packages in environment at /home/***/intel/oneapi/intelpython/latest/envs/pytorch:
#
# Name                      Version   Build               Channel
asn1crypto                  1.4.0     py37h22e6299_2      file:///home/caibo/intel/oneapi/conda_channel
blas                        1.0       mkl                 file:///home/caibo/intel/oneapi/conda_channel
bzip2                       1.0.8     h88c068d_5          file:///home/caibo/intel/oneapi/conda_channel
certifi                     2020.6.20 py37hefe589e_1      file:///home/caibo/intel/oneapi/conda_channel
cffi                        1.14.3    py37h698c159_1      file:///home/caibo/intel/oneapi/conda_channel
chardet                     3.0.4     py37h82fad40_4      file:///home/caibo/intel/oneapi/conda_channel
common_cmplr_lib_rt         2021.1.1  intel_189           file:///home/caibo/intel/oneapi/conda_channel
common_cmplr_lic_rt         2021.1.1  intel_189           file:///home/caibo/intel/oneapi/conda_channel
conda                       4.8.4     py37h926af72_10     file:///home/caibo/intel/oneapi/conda_channel
conda-package-handling      1.4.1     py37he46c983_4      file:///home/caibo/intel/oneapi/conda_channel
cpuonly                     1.0       0                   file:///home/caibo/intel/oneapi/conda_channel
cryptography                3.2       py37h89119f4_1      file:///home/caibo/intel/oneapi/conda_channel
dpcpp_cpp_rt                2021.1.1  intel_189           file:///home/caibo/intel/oneapi/conda_channel
freetype                    2.10.4    h9e62b58_0          file:///home/caibo/intel/oneapi/conda_channel
icc_rt                      2021.1.1  intel_189           file:///home/caibo/intel/oneapi/conda_channel
idna                        2.10      py37he452417_1      file:///home/caibo/intel/oneapi/conda_channel
intel-extension-for-pytorch 1.0.1     py3.7_cpu_0         file:///home/caibo/intel/oneapi/conda_channel
intel-openmp                2021.1.1  intel_189           file:///home/caibo/intel/oneapi/conda_channel
intelpython                 2021.1.1  1                   file:///home/caibo/intel/oneapi/conda_channel
jpeg                        9b        h024ee3a_2          file:///home/caibo/intel/oneapi/conda_channel
lark-parser                 0.9.0     pyh9f0ad1d_0        file:///home/caibo/intel/oneapi/conda_channel
libarchive                  3.4.2     hadfc6af_6          file:///home/caibo/intel/oneapi/conda_channel
libffi                      3.3       h07ac4c1_13         file:///home/caibo/intel/oneapi/conda_channel
libgcc-ng                   9.3.0     hdf63c60_101        file:///home/caibo/intel/oneapi/conda_channel
libpng                      1.6.37    h17b3f18_7          file:///home/caibo/intel/oneapi/conda_channel
libstdcxx-ng                9.3.0     hdf63c60_101        file:///home/caibo/intel/oneapi/conda_channel
libtiff                     4.1.0     h2733197_1          file:///home/caibo/intel/oneapi/conda_channel
libxml2                     2.9.10    h9aba842_4          file:///home/caibo/intel/oneapi/conda_channel
lz4-c                       1.9.2     h7708b8d_3          file:///home/caibo/intel/oneapi/conda_channel
lzo                         2.10      ha2aa7bf_5          file:///home/caibo/intel/oneapi/conda_channel
mkl                         2021.1.1  intel_52            file:///home/caibo/intel/oneapi/conda_channel
mkl-service                 2.3.0     py37hfc5565f_6      file:///home/caibo/intel/oneapi/conda_channel
mkl_fft                     1.2.0     py37hb9c2cde_4      file:///home/caibo/intel/oneapi/conda_channel
mkl_random                  1.2.0     py37h90a4e38_4      file:///home/caibo/intel/oneapi/conda_channel
mkl_umath                   0.1.0     py37hb46b53c_0      file:///home/caibo/intel/oneapi/conda_channel
ninja                       1.9.0     py37hfd86e86_0      file:///home/caibo/intel/oneapi/conda_channel
numpy                       1.19.2    py37h02626c5_0      file:///home/caibo/intel/oneapi/conda_channel
numpy-base                  1.19.2    py37h591eb60_0      file:///home/caibo/intel/oneapi/conda_channel
olefile                     0.46      py37_0              file:///home/caibo/intel/oneapi/conda_channel
opencl_rt                   2021.1.1  intel_189           file:///home/caibo/intel/oneapi/conda_channel
openssl                     1.1.1h    h14c3975_0          file:///home/caibo/intel/oneapi/conda_channel
pillow                      7.1.2     py37hb39fc2d_0      file:///home/caibo/intel/oneapi/conda_channel
pip                         20.2.3    py37h56aae7b_1      file:///home/caibo/intel/oneapi/conda_channel
pycosat                     0.6.3     py37h4822089_6      file:///home/caibo/intel/oneapi/conda_channel
pycparser                   2.20      py37h310c2dd_2      file:///home/caibo/intel/oneapi/conda_channel
pyopenssl                   19.1.0    py37h4200cf5_2      file:///home/caibo/intel/oneapi/conda_channel
pysocks                     1.7.0     py37h4200cf5_2      file:///home/caibo/intel/oneapi/conda_channel
python                      3.7.9     h7af079d_1          file:///home/caibo/intel/oneapi/conda_channel
python-libarchive-c         2.8       py37h4200cf5_14     file:///home/caibo/intel/oneapi/conda_channel
pytorch                     1.5.0     py3.7_cpu_0         file:///home/caibo/intel/oneapi/conda_channel
pyyaml                      5.3.1     py37h1acd8f6_0      file:///home/caibo/intel/oneapi/conda_channel
requests                    2.24.0    py37h35012da_0      file:///home/caibo/intel/oneapi/conda_channel
ruamel_yaml                 0.15.99   py37ha45e144_6      file:///home/caibo/intel/oneapi/conda_channel
setuptools                  50.3.2    py37h4200cf5_0      file:///home/caibo/intel/oneapi/conda_channel
six                         1.15.0    py37h65307dc_1      file:///home/caibo/intel/oneapi/conda_channel
sqlite                      3.33.0    h88c068d_1          file:///home/caibo/intel/oneapi/conda_channel
tbb                         2021.1.1  intel_119           file:///home/caibo/intel/oneapi/conda_channel
tbb4py                      2021.1.1  py37_intel_119      file:///home/caibo/intel/oneapi/conda_channel
tcl                         8.6.9     h14c3975_27         file:///home/caibo/intel/oneapi/conda_channel
tk                          8.6.9     h88fb5b1_8          file:///home/caibo/intel/oneapi/conda_channel
torch-ccl                   1.0.1     pypi_0              pypi
torch-ipex                  1.0.1     pypi_0              pypi
torch_ccl                   1.0.1     py3.7_cpu_0         file:///home/caibo/intel/oneapi/conda_channel
torchvision                 0.6.0     py37_cpu [cpuonly]  file:///home/caibo/intel/oneapi/conda_channel
tqdm                        4.50.2    py37hf3d76cd_0      file:///home/caibo/intel/oneapi/conda_channel
urllib3                     1.25.10   py37h9e79bd7_1      file:///home/caibo/intel/oneapi/conda_channel
wheel                       0.35.1    py37h4a4c509_1      file:///home/caibo/intel/oneapi/conda_channel
xz                          5.2.5     hcc43529_2          file:///home/caibo/intel/oneapi/conda_channel
yaml                        0.1.7     h04e08d9_7          file:///home/caibo/intel/oneapi/conda_channel
zlib                        1.2.11.1  hb8a9d29_3          file:///home/caibo/intel/oneapi/conda_channel
zstd                        1.4.5     hdb51d2f_0          file:///home/caibo/intel/oneapi/conda_channel
```

This environment uses Python 3.7.9 and PyTorch 1.5.0, plus many other oneAPI-provided tools such as intel-openmp and intel-extension-for-pytorch. For how to use these tools, along with a detailed introduction to the AI toolkit and code samples, see the official site (https://software.intel.com/content/www/us/en/develop/tools/oneapi/ai-analytics-toolkit.html). The documentation is comprehensive, and I will go into more depth in later articles.
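When you need these versions from a script (for example, to log them alongside results), the plain `conda list` output is easy to parse. Comment lines start with `#`, and every other line begins with the package name and version. Here is a minimal sketch; `parse_conda_list` is a hypothetical name. If you prefer structured output, `conda list --json` is a sturdier alternative.

```python
from typing import Dict


def parse_conda_list(text: str) -> Dict[str, str]:
    """Map package name -> version from plain-text `conda list` output."""
    packages = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and the header comment lines
        fields = line.split()
        if len(fields) >= 2:
            # columns: name, version, build, channel
            packages[fields[0]] = fields[1]
    return packages


# A few rows copied from the listing above.
SAMPLE = """\
# packages in environment at /home/***/intel/oneapi/intelpython/latest/envs/pytorch:
#
# Name Version Build Channel
python 3.7.9 h7af079d_1 file:///home/caibo/intel/oneapi/conda_channel
pytorch 1.5.0 py3.7_cpu_0 file:///home/caibo/intel/oneapi/conda_channel
intel-openmp 2021.1.1 intel_189 file:///home/caibo/intel/oneapi/conda_channel
"""

if __name__ == "__main__":
    pkgs = parse_conda_list(SAMPLE)
    print(pkgs["python"], pkgs["pytorch"])  # prints: 3.7.9 1.5.0
```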

3.3 Running a PyTorch program in the oneAPI virtual environment

To demonstrate, I ran the PyTorch_Hello_World.py program provided on the oneAPI website in my environment. The code is as follows:

```python
#!/usr/bin/env python
# encoding: utf-8

'''
==============================================================
 Copyright © 2019 Intel Corporation

 SPDX-License-Identifier: MIT
==============================================================
'''

import torch
import torch.nn as nn
from torch.utils import mkldnn
from torch.utils.data import Dataset, DataLoader

'''
BS_TRAIN: Batch size for training data
BS_TEST:  Batch size for testing data
EPOCHNUM: Number of epochs for training
'''
BS_TRAIN = 50
BS_TEST = 10
EPOCHNUM = 2

'''
TestDataset class is inherited from torch.utils.data.Dataset.
Since data for training involves data and ground truth, a flag "train" is
defined in the initialization function. When train is True, instance of
TestDataset gets a pair of training data and label data. When it is False,
the instance gets data only for inference. Value of the flag "train" is set
in __init__ function.
In __getitem__ function, data at index position is supposed to be returned.
__len__ function returns the overall length of the dataset.
'''
class TestDataset(Dataset):
    def __init__(self, train=True):
        super(TestDataset, self).__init__()
        self.train = train

    def __getitem__(self, index):
        if self.train:
            # Random 3x112x112 input and 6x110x110 target: Conv2d(3, 6, 3)
            # without padding maps 112x112 to 110x110 (112 - 3 + 1).
            return torch.rand(3, 112, 112), torch.rand(6, 110, 110)
        else:
            return torch.rand(3, 112, 112)

    def __len__(self):
        if self.train:
            return 100
        else:
            return 20

'''
TestModel class is inherited from torch.nn.Module.
Operations that will be used in the topology are defined in __init__ function.
Input data x is supposed to be passed to the forward function. The topology is
implemented in the forward function. When performing training/inference, the
forward function will be called automatically by passing input data to a
model instance.
'''
class TestModel(nn.Module):
    def __init__(self):
        super(TestModel, self).__init__()
        self.conv = nn.Conv2d(3, 6, 3)
        self.norm = nn.BatchNorm2d(6)
        self.relu = nn.ReLU()

    def forward(self, x):
        x = self.conv(x)
        x = self.norm(x)
        x = self.relu(x)
        return x

'''
Perform training and inference in main function
'''
def main():
    '''
    The following 3 components are required to perform training.
    1. model: Instantiate model class
    2. optim: Optimization function for updating topology parameters during training
    3. crite: Criterion function to minimize loss
    '''
    model = TestModel()
    optim = torch.optim.SGD(model.parameters(), lr=0.01)
    crite = nn.MSELoss(reduction='sum')

    '''
    1. Instantiate the Dataset class defined before
    2. Use torch.utils.data.DataLoader to load data from the Dataset instance
    '''
    train_data = TestDataset()
    trainLoader = DataLoader(train_data, batch_size=BS_TRAIN)
    test_data = TestDataset(train=False)
    testLoader = DataLoader(test_data, batch_size=BS_TEST)

    '''
    Perform training and inference
    Use model.train() to set the model into train mode. Use model.eval() to set
    the model into inference mode.
    Use for loop with enumerate(instance of DataLoader) to go through the whole
    dataset for training/inference.
    '''
    # range(EPOCHNUM) runs exactly EPOCHNUM passes over the training data
    for i in range(EPOCHNUM):
        model.train()
        for batch_index, (data, y_ans) in enumerate(trainLoader):
            '''
            1. Clear the gradients accumulated in the model parameters
            2. Do forward-propagation
            3. Calculate loss of the forward-propagation with the criterion function
            4. Calculate gradients with the backward() function
            5. Update parameters of the model with the optimization function
            '''
            optim.zero_grad()
            y = model(data)
            loss = crite(y, y_ans)
            loss.backward()
            optim.step()

    model.eval()
    '''
    1. User is suggested to use JIT mode to get best performance with DNNL with
       minimum change of PyTorch code. User may need to pass an explicit flag or
       invoke a specific DNNL optimization pass. The PyTorch DNNL JIT backend is
       under development (RFC link https://github.com/pytorch/pytorch/issues/23657),
       so the example below is given in imperative mode.
    2. To have model accelerated by DNNL under imperative mode, user needs to
       explicitly insert format conversion for DNNL operations using
       tensor.to_mkldnn() and to_dense(). For best result, user needs to insert
       the format conversion on the boundary of a sequence of DNNL operations.
       This could boost performance significantly.
    3. For inference task, user needs to prepack the model's weight using
       mkldnn.to_mkldnn(model) to save the weight format conversion overhead.
       It could bring good performance gain sometimes for single batch inference.
    '''
    model_mkldnn = mkldnn.to_mkldnn(model)
    for batch_index, data in enumerate(testLoader):
        # Dense -> MKL-DNN layout on the way in, back to dense on the way out
        y = model_mkldnn(data.to_mkldnn()).to_dense()

if __name__ == '__main__':
    main()
    print('[CODE_SAMPLE_COMPLETED_SUCCESFULLY]')
```

This is a simple model-training demo. Inside the virtual environment, run it with `python PyTorch_Hello_World.py`. Your own programs can also run directly in this environment. If it lacks libraries you need (matplotlib and pandas are common examples), install them with `conda install`.
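As its last action, the sample prints `[CODE_SAMPLE_COMPLETED_SUCCESFULLY]` (spelled that way in its source). A script can therefore treat "exit status 0 and marker present" as success, for example when checking several samples after setting up a new machine. Here is a sketch; `run_sample` is a hypothetical helper. It runs the script with the current interpreter (`sys.executable`), so launch it from inside the activated oneAPI environment.

```python
import subprocess
import sys

# Printed by the sample on success (spelling as in the sample's source).
MARKER = "[CODE_SAMPLE_COMPLETED_SUCCESFULLY]"


def run_sample(script: str, python: str = sys.executable) -> bool:
    """Run `script`; True if it exits with status 0 and printed MARKER."""
    proc = subprocess.run(
        [python, script],
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,  # merge stderr so tracebacks are captured too
        universal_newlines=True,
    )
    return proc.returncode == 0 and MARKER in proc.stdout


if __name__ == "__main__":
    ok = run_sample("PyTorch_Hello_World.py")
    print("sample OK" if ok else "sample FAILED")
```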

4. Summary

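This article covered how to configure the Intel oneAPI environment variables and the basics of using it, walking through the workflow from start to finish. Overall, the Intel oneAPI AI toolkit is convenient to use and includes many tools. For more advanced usage, see the official documentation (https://software.intel.com/content/www/us/en/develop/tools/oneapi/documentation/library.html?query=&currentPage=1&externalFilter=rsoftware:inteloneapitoolkits/intelaianalyticstoolkit) and the code samples (https://github.com/oneapi-src/oneAPI-samples/tree/master/AI-and-Analytics).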